Financial modeling is increasingly important to the banking industry, with almost every institution now using models for some purpose. Although the use of models as a management tool is a significant advance for the industry, the models themselves represent a new source of risk — the potential for model output to incorrectly inform management decisions.

Although modeling necessarily involves the opportunity for error, strong governance procedures can help minimize model risk by

- Providing reasonable assurance the model is operating as intended;

- Contributing to ongoing model improvement to maintain effectiveness; and

- Promoting better management understanding of the limitations and potential weaknesses of a model.

This article briefly discusses the use of models in banking and describes a conceptual framework for model governance. In addition, the article suggests possible areas of examiner review when evaluating the adequacy of an institution's model oversight, controls and validation practices.

Use of Models in the Banking Industry

Fundamentally, financial models describe business activity, predicting future or otherwise unknown aspects of that activity. Models can serve many purposes for insured financial institutions, such as informing decision making, measuring risk, and estimating asset values. Some examples:

- Credit scoring models inform decision making, providing predictive information on the potential for default or delinquency used in the loan approval process and risk pricing

- Interest rate risk models measure risk, monitoring earnings exposure to a range of potential changes in rates and market conditions

- Derivatives pricing models estimate asset value, providing a methodology for determining the value of new or complex products for which market observations are not readily available

In addition, models play a direct role in determining regulatory capital requirements at many of the nation's largest and most complex banking organizations. Some of these institutions already use value-at-risk models to determine regulatory capital held for market risk exposure.1 At institutions adopting the Basel II capital standards when finalized, financial models will have a much expanded role in establishing regulatory capital held for all risk types.

Not all models involve complex mathematical techniques or require detailed computer programming code. This does not, however, diminish their potential importance to the organization. For example, many banks use spreadsheets that capture historical performance, current portfolio composition, and external factors to calculate an appropriate range for the allowance for loan and lease losses. Although at first glance this may not appear to be a "model," the output from such spreadsheets directly contributes to preparation of the institution's reported financial statements, and some controls are necessary, given the seriousness of any potential errors.
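To show why such a spreadsheet is a model, the Python sketch below performs the kind of arithmetic it might contain. Historical loss rates are applied to current segment balances, and the result is widened into a range by a qualitative adjustment for external factors. The segment names, balances, loss rates, and the multiplicative adjustment are hypothetical assumptions, not a recommended allowance methodology.

```python
# Hypothetical spreadsheet-style allowance calculation. Every segment,
# balance, loss rate, and adjustment factor here is an illustrative assumption.

# segment -> (current balance in dollars, historical annual net loss rate)
SEGMENTS = {
    "commercial": (50_000_000, 0.012),
    "residential": (80_000_000, 0.004),
    "consumer": (20_000_000, 0.025),
}

# Assumed low/high multipliers reflecting external factors such as
# local economic conditions.
QUALITATIVE_RANGE = (0.9, 1.2)


def allowance_range(segments, qualitative_range):
    """Return (low, high) allowance estimates in dollars."""
    # Historical performance applied to current portfolio composition.
    base = sum(balance * loss_rate for balance, loss_rate in segments.values())
    low_factor, high_factor = qualitative_range
    return base * low_factor, base * high_factor


if __name__ == "__main__":
    low, high = allowance_range(SEGMENTS, QUALITATIVE_RANGE)
    print(f"Indicated allowance range: ${low:,.0f} to ${high:,.0f}")
```

A single mistyped loss rate or a broken link to the balance feed would flow directly into the reported allowance, which is why even a calculation this small warrants some controls.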

Model Governance

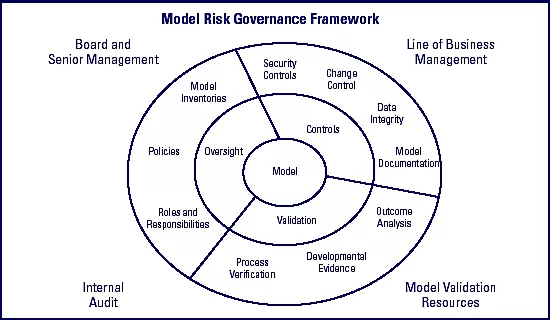

Institutions design and implement procedures to help ensure models achieve their intended purpose. The necessary rigor of procedures is specific to each model. An institution's use of and reliance on a model determines its importance and, in turn, establishes the level of controls and validation needed for that model. For some simple spreadsheet models, controls and validation may consist of a brief operational procedures document; password protection on the electronic file; and periodic review by internal audit for accuracy of the data feeds, formulas, and output reporting. While procedures will vary, certain core model governance principles typically will apply at all institutions (see Figure 1):

- The board establishes policies providing oversight throughout the organization commensurate with overall reliance on models.

- Business line management2 provides adequate controls over each model's use, based on the criticality and complexity of the model.

- Bank staff or external parties with appropriate independence and expertise periodically validate that the model is working as intended.

- Internal audit tests model control practices and model validation procedures to ensure compliance with established policies and procedures.

Figure 1

Supervisory Review of Models

With the industry's growing reliance on financial modeling, regulators are devoting additional attention to model governance.3 Examiners do not typically review controls and validation for all models, but instead select specific models in connection with the supervisory review of business activities where model use is vital or increasing.

The evaluation of model use and governance often becomes critical to the regulatory assessment of risk in the reviewed activities. For example, many banks have completely integrated the use of credit scoring models into their retail and small business lending. Model results play a significant role in underwriting, contributing to the decisions to make loans and price loans for credit risk. Model results also typically are used to assign credit risk grades to loans, providing vital information used in risk management and the determination of the allowance for loan and lease losses. Therefore, examiner assessment of credit risk and credit risk management at banks that use integrated credit scoring models requires a thorough evaluation of the use and reliability of the scoring models.

Although supervisory review of some complex models may require quantitative or information technology specialists, examiners can perform most model reviews. Even when specialists are used, model review does not occur in isolation; the specialist's evaluation of mathematical theories or program coding is integrated into the examiner's assessment of model use. Regulatory review typically focuses on the core components of the bank's governance practices by evaluating model oversight, examining model controls, and reviewing model validation (see Figure 2). Such reviews also consider findings of the bank's internal audit staff relative to these areas.

Figure 2

Model Oversight

When evaluating board and senior management oversight, examiners typically

- Review model governance policies to determine (1) if the policies are adequate for the bank's level of model use and control, and (2) if validation procedures used for individual models comply with established policies; and

- Review the bank's model inventory for accuracy and completeness.

Model policies: A single board-approved policy governing models may suffice for many banks, although those with greater reliance on financial modeling may supplement the board-approved policy with more detailed policies for each line of business. Such policies typically

- Define a model, identifying what components of management information systems are considered subject to model governance procedures;

- Establish standards for controls and validation, either enterprise-wide minimum standards or, alternatively, varying levels of expected controls and validation based on model criticality and complexity;

- Normally require verification of control procedures and independent validation of model effectiveness before a model is implemented;4 and

- Generally define the roles of management, business line staff, internal audit, information technology staff, and other personnel relative to model development and acquisition, use, controls, and validation responsibilities.

Model inventories: Banks of any size or complexity benefit from maintaining an inventory of all models used. The inventory should catalogue each model and describe the model's purpose, identify the business line responsible for the model, indicate the criticality and complexity of the model and the status of the model's validation, and summarize major concerns identified by validation procedures or internal audit review. Periodic management attestation to the accuracy and completeness of the model inventory is a strong practice to help ensure that the inventory is appropriately maintained.
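An inventory of this kind can be kept as structured records, which makes completeness checks and attestation tracking easy to automate. The sketch below is a minimal Python illustration under stated assumptions: the field names, the "high/medium/low" rating scale, and the annual attestation rule are hypothetical, not a regulatory format.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional

# Fields mirror the inventory contents described above; the names and the
# "high/medium/low" rating scale are illustrative assumptions.
REQUIRED_FIELDS = ("purpose", "business_line", "criticality", "complexity", "validation_status")


@dataclass
class ModelRecord:
    name: str
    purpose: str
    business_line: str
    criticality: str         # assumed scale: "high", "medium", "low"
    complexity: str          # same assumed scale
    validation_status: str   # e.g. "validated", "pending"
    last_validated: Optional[date] = None
    major_concerns: List[str] = field(default_factory=list)


def incomplete_entries(inventory: List[ModelRecord]) -> List[str]:
    """Return names of models whose required descriptive fields are blank."""
    return [
        record.name
        for record in inventory
        if any(not getattr(record, attr) for attr in REQUIRED_FIELDS)
    ]


def attestation_overdue(last_attestation: date, as_of: date, max_days: int = 365) -> bool:
    """Hypothetical policy: management attests to the inventory at least annually."""
    return (as_of - last_attestation).days > max_days


inventory = [
    ModelRecord("Retail credit score", "Loan approval and risk pricing",
                "Consumer lending", "high", "medium", "validated", date(2024, 3, 31)),
    ModelRecord("ALLL spreadsheet", "Allowance range estimate",
                "", "high", "low", "pending",
                major_concerns=["Manual data entry from loan system"]),
]

print(incomplete_entries(inventory))                                    # ['ALLL spreadsheet']
print(attestation_overdue(date(2023, 1, 15), as_of=date(2024, 6, 30)))  # True
```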

Model Control Practices

When examining controls around individual models, regulators

- Review model documentation for (1) discussion of model theory, with particular attention to model limitations and potential weaknesses, and (2) operating procedures;

- Review data reconciliation procedures and business line analysis of model results; and

- Evaluate security and change control procedures.

By conducting their own review of model documentation and controls, examiners gain a stronger understanding of the model's process flow. This understanding enables examiners to test the findings of the bank's validation and internal audit review against their own observations.

Model documentation: Documentation provides a thorough understanding of how the model works (model theory) and allows a new user to assume responsibility for the model's use (operational procedures). Each model should have appropriate documentation to accomplish these two objectives, with the level of documentation determined by the model's use and complexity. Generally, elements of documentation include:

- A description of model purpose and design.

- Model theory, including the logic behind the model and sensitivity to key drivers and assumptions.

- Data needs.

- Detailed operating procedures.

- Security and change control procedures.

- Validation plans and findings of validations performed.

Data integrity: Maintaining data integrity is vital to model performance. Much of the information used in a model is electronically extracted or manually input from source systems; either approach provides opportunity for error. Business line management is responsible for the regular reconciliation of source system information with model data to ensure accuracy and completeness.5
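A basic reconciliation compares source-system records with model inputs item by item rather than only in total, because offsetting errors can leave totals in balance. The Python sketch below assumes both sides can be keyed by a common account or position identifier; the identifiers, amounts, and one-cent tolerance are hypothetical.

```python
from typing import Dict


def reconcile(source: Dict[str, float], model_input: Dict[str, float],
              tolerance: float = 0.01) -> dict:
    """Compare balances keyed by a (hypothetical) account or position ID."""
    shared = source.keys() & model_input.keys()
    return {
        # Records in the source system that never reached the model.
        "missing_from_model": sorted(source.keys() - model_input.keys()),
        # Records in the model with no counterpart in the source system.
        "not_in_source": sorted(model_input.keys() - source.keys()),
        # Records present in both whose amounts disagree beyond tolerance.
        "mismatched": sorted(k for k in shared
                             if abs(source[k] - model_input[k]) > tolerance),
        "source_total": sum(source.values()),
        "model_total": sum(model_input.values()),
    }


source = {"LN001": 250_000.00, "LN002": 120_500.00, "LN003": 75_000.00}
model_input = {"LN001": 250_000.00, "LN002": 120_050.00, "LN004": 10_000.00}

result = reconcile(source, model_input)
print(result["missing_from_model"], result["not_in_source"], result["mismatched"])
# ['LN003'] ['LN004'] ['LN002']
```

Each exception list gives business line staff a specific item to investigate, which is more useful than a single out-of-balance total.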

Data inputs need to be sufficient to provide the level of data consistency and granularity necessary for the model to function as designed. Data lacking sufficient granularity, such as product- or portfolio-level information, may be inadequate for models that use drivers and assumptions associated with transaction-level data. For example, the robustness of an interest rate risk model designed to use individual security-level prepayment estimates could be compromised by the use of an average prepayment speed for aggregate mortgage-backed securities held in the investment portfolio.
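The prepayment example can be made concrete with a small calculation. The sketch below assumes a hypothetical prepayment rule in which a security prepays faster only when its coupon exceeds the market rate. Because that rule is nonlinear, applying it once to an average coupon gives a different answer than applying it to each security. The base rate, slope, coupons, and balances are all illustrative assumptions, and scheduled amortization is ignored.

```python
# Hypothetical prepayment rule: the annual prepayment rate (CPR) rises only
# when a security's coupon exceeds the market rate (a refinancing incentive).
BASE_CPR = 0.06         # assumed baseline annual prepayment rate
SLOPE_PER_POINT = 0.08  # assumed CPR increase per percentage point of incentive


def cpr(coupon_pct: float, market_rate_pct: float) -> float:
    incentive = max(0.0, coupon_pct - market_rate_pct)
    return BASE_CPR + SLOPE_PER_POINT * incentive


# Two hypothetical MBS holdings: (balance, coupon in percent)
holdings = [(100.0, 4.0), (100.0, 7.0)]
shocked_rate = 5.0  # market rate after an assumed rate decline

# Security level: apply the rule to each holding's own coupon.
security_level = sum(bal * cpr(cpn, shocked_rate) for bal, cpn in holdings)

# Aggregate: apply the rule once to the balance-weighted average coupon.
total = sum(bal for bal, _ in holdings)
avg_coupon = sum(bal * cpn for bal, cpn in holdings) / total
aggregate = total * cpr(avg_coupon, shocked_rate)

# First-year prepayments under each approach
print(round(security_level, 2), round(aggregate, 2))  # 28.0 20.0
```

The averaged input hides the fact that one holding is well above the market rate and the other is not, so the aggregate approach understates projected prepayments in this scenario.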

Security and change control: Key financial models should be subject to the same controls as those used for other vital bank software. Security controls help protect software from unauthorized use or alteration and from technological disruptions. Change control helps maintain model functionality and reliability as ongoing enhancements occur.

Some level of security control is generally appropriate for all financial models. Security controls limit access to the program to authorized users and appropriate information technology personnel. Control can be maintained by limiting physical or electronic access to the computer or server where the program resides and by password protection. The institution should have backup procedures to recover important modeling programs in the event of technological disruption.

Change control may be necessary only for complex models. Such procedures are used to ensure all changes are justified, properly approved, documented, and verified6 for accuracy. Events covered by such procedures include the addition of new data inputs, changes in the method of data extraction from source systems, modifications to formulas or assumptions, and changes in the use of the model output. Typically, proposed changes are submitted for approval by business line management before any alterations to the model are initiated. To maintain up-to-date documentation, staff may log all changes made to the model, including the date of the change, a description of the change, initiating personnel, approving personnel, and verification.
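A change log of this kind lends itself to simple automated checks. The Python sketch below records the fields listed above and flags entries that were not approved before the change, not verified, or verified by the person who made the change. The field names and exception labels are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ChangeLogEntry:
    """One change-log line; field names are illustrative assumptions."""
    change_date: date
    description: str
    initiated_by: str
    approved_by: Optional[str] = None
    approved_on: Optional[date] = None
    verified_by: Optional[str] = None

    def exceptions(self) -> List[str]:
        """Return control exceptions for this entry (empty if none)."""
        issues = []
        if not self.approved_by or self.approved_on is None:
            issues.append("not approved")
        elif self.approved_on > self.change_date:
            # Approval should precede any alteration to the model.
            issues.append("approved after change was made")
        if not self.verified_by:
            issues.append("not verified")
        elif self.verified_by == self.initiated_by:
            # Verification is optimally performed by someone other than the initiator.
            issues.append("verified by initiator")
        return issues


log = [
    ChangeLogEntry(date(2024, 2, 1), "Added unemployment-rate data input", "analyst_a",
                   "line_manager", date(2024, 1, 25), "analyst_b"),
    ChangeLogEntry(date(2024, 3, 15), "Revised prepayment assumption", "analyst_a",
                   "line_manager", date(2024, 3, 20), "analyst_a"),
    ChangeLogEntry(date(2024, 4, 2), "Changed source-system extraction query", "analyst_b"),
]

for entry in log:
    print(entry.change_date, entry.description, entry.exceptions())
```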

When model importance and complexity are high, management may choose to run parallel models — prechange and postchange. Doing so will assist in determining the model's sensitivity to the changes. Changes significantly affecting model output, as measured by such sensitivity analysis, may trigger the need for accelerated validation.
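A parallel run reduces to comparing the two versions' outputs scenario by scenario. The Python sketch below flags scenarios whose output moved by more than an assumed 10 percent relative threshold. The scenario labels and output values (read here as projected percentage changes in net interest income) are hypothetical, and an actual trigger level would be set by bank policy.

```python
from typing import Dict


def parallel_run_flags(pre_outputs: Dict[str, float], post_outputs: Dict[str, float],
                       threshold: float = 0.10) -> Dict[str, float]:
    """Return scenarios whose relative change exceeds the (assumed) threshold."""
    flagged = {}
    for scenario, before in pre_outputs.items():
        after = post_outputs[scenario]
        if before == 0:
            # Any movement away from zero is treated as material.
            change = float("inf") if after != 0 else 0.0
        else:
            change = abs(after - before) / abs(before)
        if change > threshold:
            flagged[scenario] = change
    return flagged


# Hypothetical outputs from the prechange and postchange versions
pre = {"+200bp": -4.1, "+100bp": -2.0, "-100bp": 1.8}
post = {"+200bp": -4.8, "+100bp": -2.1, "-100bp": 1.9}

flags = parallel_run_flags(pre, post)
print(list(flags))  # ['+200bp']
```

A nonempty result would be the signal to consider accelerating validation of the changed model.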

Model Validation

Validation should not be thought of as a purely mathematical exercise performed by quantitative specialists. It encompasses any activity that assesses how effectively a model is operating. Validation procedures not only confirm the appropriateness of model theory and the accuracy of program code but also test the integrity of model inputs, outputs, and reporting.

Validation is typically completed before a model is put into use and also on an ongoing basis to ensure the model continues to perform as intended. The frequency of planned validation will depend on the use of the model and its importance to the organization. The need for updated validation could be triggered earlier than planned by substantive changes to the model, to the data, or to the theory supporting model logic.
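Planned frequency and event-driven triggers can be combined into a single due-date rule. The Python sketch below is one possible form, assuming hypothetical intervals by model importance and an assumed 90-day window for completing validation after a substantive change. A trigger can only pull the due date earlier, never push it later.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical planned intervals between validations, by model importance.
VALIDATION_INTERVAL_DAYS = {"high": 365, "medium": 730, "low": 1095}


def next_validation_due(last_validated: date, importance: str,
                        trigger_date: Optional[date] = None,
                        grace_days: int = 90) -> date:
    """Return the date by which the next validation should be completed.

    trigger_date marks a substantive change to the model, its data, or its
    supporting theory; grace_days is an assumed window for completing the
    accelerated validation.
    """
    planned = last_validated + timedelta(days=VALIDATION_INTERVAL_DAYS[importance])
    if trigger_date is not None and trigger_date > last_validated:
        # A substantive change can pull the due date earlier, never later.
        return min(planned, trigger_date + timedelta(days=grace_days))
    return planned


print(next_validation_due(date(2024, 1, 31), "medium"))
print(next_validation_due(date(2024, 1, 31), "medium", trigger_date=date(2024, 6, 1)))  # 2024-08-30
```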

Examiners do not validate bank models; validation is the responsibility of the bank. However, examiners do test the effectiveness of the bank's validation function by selectively reviewing various aspects of validation work performed on individual models.7 When reviewing validation, examiners

- Evaluate the scope of validation work performed;

- Review the report summarizing validation findings and any additional work papers needed to understand findings;

- Evaluate management's response to the report summarizing the findings, including remediation plans and time frames; and

- Assess the qualifications of staff or vendors performing the validation.

This process is analogous to regulatory review of bank lending. When looking at loan files, examiners do not usually rely exclusively on the review work performed by loan officers and loan review staff, but also look at original financial statements and other documents to verify the loan was properly underwritten and risk graded. Similarly, examiners review developmental evidence, verify processes, and analyze model output not to validate the model, but to assess the adequacy of the bank's ongoing validation (see Figure 3).

Components of Validation:

- Developmental evidence: The review of developmental evidence focuses on the reasonableness of the conceptual approach and quantification techniques of the model itself. This review typically considers the following:

  - Documentation and support for the appropriateness of the logic and specific risk quantification techniques used in the model.

  - Testing of model sensitivity to key assumptions and data inputs used.

  - Support for the reasonableness and validity of model results.

  - Support for the robustness of scenarios used for stress testing, when stress testing is performed.

- Process verification: Process verification considers data inputs, the workings of the model itself, and model output reporting. It includes an evaluation of controls, the reconciliation of source data systems with model inputs, accuracy of program coding, and the usefulness and accuracy of model outputs and reporting. Such verification also may include benchmarking of model processes against industry practices for similar models.

- Outcome analysis: Outcome analysis focuses on model output and reporting to assess the predictiveness of the model. It may include both qualitative and quantitative techniques:

  - Qualitative reasonableness checks consider whether the model is generally producing expected results.

  - Back-testing is a direct comparison of predicted results to observed actual results.

  - Benchmarking of model output compares predicted results generated by the model being validated with predicted results from other models or sources.
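For a value-at-risk model, back-testing usually means counting the days on which the actual trading loss exceeded the VaR estimate and comparing that count with the number expected at the model's confidence level. The Python sketch below does this. The zone function follows the Basel Committee's traffic-light scheme for 250 daily observations of 99 percent VaR (green for 0 to 4 exceptions, yellow for 5 to 9, red for 10 or more). The ten-day data set is purely hypothetical.

```python
from typing import Sequence, Tuple


def count_exceptions(var_estimates: Sequence[float], actual_pnl: Sequence[float]) -> int:
    """Count days on which the actual loss exceeded that day's VaR estimate.

    VaR is a positive loss amount; P&L is negative on losing days.
    """
    if len(var_estimates) != len(actual_pnl):
        raise ValueError("VaR and P&L series must be the same length")
    return sum(1 for var, pnl in zip(var_estimates, actual_pnl) if -pnl > var)


def backtest(var_estimates: Sequence[float], actual_pnl: Sequence[float],
             confidence: float = 0.99) -> Tuple[int, float]:
    """Return (observed exceptions, exceptions expected at this confidence level)."""
    observed = count_exceptions(var_estimates, actual_pnl)
    expected = len(actual_pnl) * (1 - confidence)
    return observed, expected


def basel_zone(exceptions: int) -> str:
    """Traffic-light zone; meaningful only for 250 daily observations of 99% VaR."""
    if exceptions <= 4:
        return "green"
    if exceptions <= 9:
        return "yellow"
    return "red"


# Ten hypothetical trading days with a constant VaR of 1.0 (in $ millions).
var_series = [1.0] * 10
pnl_series = [0.5, -1.2, -0.3, -2.0, 0.1, -0.9, -1.01, 0.0, 0.2, -0.5]

observed, expected = backtest(var_series, pnl_series)
print(observed, round(expected, 2))  # 3 0.1
```

Ten days is far too short a window to draw conclusions; the example only shows the mechanics, which is why the zone function assumes a 250-day sample.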

Figure 3

Expertise and independence of model staff: The criticality and complexity of a model determine the level of expertise and independence necessary for validation staff, as well as the scope and frequency of validations. The more vital or complex the model, the greater the need for frequent and detailed validations performed by independent, expert staff.
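A model policy can express this scaling as a tiering rule that maps criticality and complexity to validation expectations. The Python sketch below shows one hypothetical way to do so. The assumed rule lets the higher of the two ratings set the tier, and the frequencies, validator types, and scopes are illustrative placeholders, not supervisory requirements.

```python
# Hypothetical tiering rule and standards; a bank's own policy would define both.
LEVELS = {"low": 1, "medium": 2, "high": 3}

VALIDATION_STANDARDS = {
    3: {"frequency_months": 12,
        "validator": "independent validation group or external vendor",
        "scope": "theory, code, data integrity, controls, outcome analysis"},
    2: {"frequency_months": 24,
        "validator": "staff independent of model development and use",
        "scope": "process verification and outcome analysis"},
    1: {"frequency_months": 36,
        "validator": "internal audit or other reviewer with adequate expertise",
        "scope": "data feeds, formulas, and output reporting"},
}


def model_tier(criticality: str, complexity: str) -> int:
    """Assumed rule: the higher of the two ratings determines the tier."""
    return max(LEVELS[criticality], LEVELS[complexity])


def validation_standard(criticality: str, complexity: str) -> dict:
    return VALIDATION_STANDARDS[model_tier(criticality, complexity)]


print(model_tier("high", "low"))                                 # 3
print(validation_standard("low", "medium")["frequency_months"])  # 24
```

Taking the maximum means a simple but critical model, such as an allowance spreadsheet, still receives frequent validation, consistent with the point that importance as well as complexity drives the required rigor.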

The complexity of some models may require validation staff to have specialized quantitative skills and knowledge. The extent of computer programming in the model design may require specialized technological knowledge and skills as well.

Optimally, validation work is performed by parties completely independent from the model's design and use. They may be an independent model validation group within the bank, internal audit, staff with model expertise from other areas of the bank, or an external vendor. However, for some models with limited importance, achieving complete independence while maintaining adequate expertise may not always be practical or necessary. In such cases, management and internal audit should pay particular attention to the appropriateness of the validation scope and procedures.

Validation work can incorporate combinations of model expertise and skill levels. For example, management may rely on the bank's own internal audit staff to verify the integrity of data inputs, adequacy of model controls, and appropriateness of model output reporting, while using an outside vendor with model expertise to validate a model's theory and code.

Third-party validation: Vendors are sometimes used to meet the need for a high level of independence and expertise. They can bring a broad perspective from their work at other financial institutions, providing a useful source for theory and process benchmarking. When using external sources to validate models, appropriate bank personnel should determine that vendor review procedures meet policy standards and are appropriate to the specific model.

Banks sometimes use third parties for validation when they purchase vendor models. The validation of the model theory, mathematics, assumptions, and code for purchased models can be complicated, as vendors sometimes are unwilling to share key model formulas and assumptions or program code with clients. In such cases, vendors typically supply clients with validation reports performed by independent parties. Such work can be relied on if management has adequate information to determine the scope is adequate and findings are appropriately conveyed to and acted on by the model vendor. Management may also increase its comfort with vendor-supplied models through a greater emphasis on regular outcome analysis. However, management cannot rely exclusively on a vendor's widespread industry acceptance as evidence of reliability.

Supervisory Evaluation of Model Use and Governance

Bank management is responsible for establishing an effective model governance program to recognize, understand, and limit the risks involved in the use of these important management tools. The examiner's role is to evaluate model use and governance practices relative to the institution's complexity and the overall importance of models to its business activities. Examiners incorporate their findings into their assignment of supervisory ratings to the bank.

For example, regulatory guidelines for rating the sensitivity to market risk component under the Uniform Financial Institutions Rating System include an assessment of management's ability to identify, measure, monitor, and control exposure to changes in interest rates or market conditions.8 Any significant examiner concerns with the effectiveness of a model used to measure and monitor this risk, such as the failure to validate the model or a lack of understanding of model output, would have some negative effect on the rating. Conversely, if the model improves interest rate risk management, this would be positively reflected in the rating.

Other component ratings also can be influenced by model use, such as the evaluation of credit scoring models' effects on loan underwriting procedures and credit risk management in assigning an asset quality rating. The management component rating also may be influenced if governance procedures over critical models are weak.

The use of financial modeling in the banking industry will continue to expand. By necessity, supervisory attention to the adequacy of governance practices designed to assess and limit associated model risk also will increase.

Robert L. Burns, CFA, CPA

Senior Examiner

Potential bank governance practices and supervisory activities described in this article are consistent with existing regulatory guidance, but represent the thoughts of the author and should not be considered regulatory policy or formal examination guidance.

1 Institutions with $1 billion or more in trading assets are subject to the 1996 Market Risk Amendment to risk-based capital regulations.

2 Providing for appropriate controls may be the responsibility of senior management at smaller organizations.

3 OCC Bulletin 2000-16, "Risk Modeling" (May 30, 2000), is the primary source for formal regulatory guidance on model governance and is available at https://www.occ.gov/static/rescinded-bulletins/bulletin-2000-16.pdf.

4 Banks may sometimes face compelling business reasons to use models prior to completion of these tasks. For example, trading of certain complex derivative products often relies on rapidly evolving valuation models. Management may, in some instances, decide the potential return from such activities justifies the additional risk accepted through the use of a model that has not been validated. In such cases, management should

- Specifically approve the temporary use of an unvalidated model for the product.

- Formalize plans for a thorough validation of the model, including a specific time frame for completion.

- Establish limits on risk exposures, such as limiting the volume of trades that are permitted before validation is completed.
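The limits in this note can be enforced mechanically at the point of trade. The Python sketch below is a hypothetical illustration: it records management's temporary approval of an unvalidated model, with a validation deadline and a cap on the number of trades, and blocks new trades once either limit is reached. The class, field names, and values are assumptions, not a prescribed control design.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TemporaryApproval:
    """Hypothetical record of approval to use a model before validation."""
    model_name: str
    approved_on: date
    validation_deadline: date
    max_trades_before_validation: int
    trades_executed: int = 0

    def can_trade(self, today: date) -> bool:
        """Allow a new trade only before the deadline and within the trade limit."""
        return (today <= self.validation_deadline
                and self.trades_executed < self.max_trades_before_validation)

    def record_trade(self, today: date) -> None:
        if not self.can_trade(today):
            raise RuntimeError(f"{self.model_name}: trade blocked pending validation")
        self.trades_executed += 1


approval = TemporaryApproval("Structured note pricer", date(2024, 5, 1),
                             date(2024, 8, 31), max_trades_before_validation=3)
for _ in range(3):
    approval.record_trade(date(2024, 6, 3))
print(approval.can_trade(date(2024, 6, 4)))  # False: trade limit reached
```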

5 For example, the regular verification of data integrity for a value-at-risk model likely would include the following:

- Reconciliation of trading account exposures in source information systems with model inputs to ensure that all trading positions are being captured and accurately incorporated into the model.

- Reconciliation of model outputs with model inputs to ensure all data inputs are being appropriately used, with particular attention to handling missing, incomplete, or erroneous data fields that serve as risk drivers in the computation of value-at-risk for each trading position.

6 Optimally, all changes to models should be verified by another party to ensure the changes were made accurately and within the guidelines of the approval. This does not constitute validation, but merely verification that approved changes were made correctly.

7 This review may require the use of quantitative specialists, depending on the complexity of the model.

8 Relative to the evaluation of a bank's sensitivity to market risk, the FDIC Manual of Examination Policies states, "While taking into consideration the institution's size and the nature and complexity of its activities, the assessment should focus on the risk management process, especially management's ability to measure, monitor, and control market risk." The manual section is available at www.fdic.gov/regulations/safety/manual/section7-1.pdf.